12. Rates of Growth of Functions

CAUTION - CHAPTER UNDER CONSTRUCTION!

NOTICE: If you are enrolled in the CSC 230 course that I teach at SFSU, you need to use the “CSC230 version” of the book, which I will be updating throughout the semester. The version you are looking at now is the “public version” of the book that will not be updated again before the start of June 2026.

This chapter was last updated on April 14, 2025.

Contents locked until 11:59 p.m. Pacific Standard Time on May 23, 2025.

You have seen that some tasks can be completed by more than one algorithm. Two questions to ask are:

- "How do you choose which algorithm to use?"
- "Why is it important to make such a choice?"

This chapter will discuss tools you can use to help answer these questions. In particular, ways of comparing the rates of growth of functions will allow us to compare how two algorithms perform as the size of their input increases without bound.

Key terms and concepts covered in this chapter:

- Complexity
- Big-\(\Theta\) notation
- Big-\(O\) notation

12.1. Complexity of Algorithms

To implement an algorithm, we must consider both the space needed to do the work and the time needed to complete all of its steps.

For example, imagine that you are asked to complete a few algebra homework exercises, each of which involves solving a linear equation by hand with paper and pencil. Now suppose that "few" means "one hundred" and that each linear equation takes multiple steps to solve, like the one below. \[ \text{Exercise 1. Solve for } x \text{: } 40(x+6)-9(3x+5) = 65(x+7)-(7x+132)\] It is not difficult to solve linear equations like this one because it is clear what steps you need to use… but it is tedious and will likely require a lot of paper! That is, it will consume a lot of time and space to solve even the first of these equations, and you’ll have only ninety-nine more to do after that!

In this textbook, the focus will be on time complexity and asymptotics, that is, the comparison of the time needed by the algorithms as the size of the input becomes larger and larger without any bound.

12.2. The Order of a Function and Big Theta Notation

In this section, we will define a relation that describes what it means to say that "two functions grow at the same rate." More precisely, "two functions grow at the same rate, asymptotically, as the input variable grows without an upper bound." We will also introduce big \(\Theta\) notation.

Note: \(\Theta\) is the uppercase Greek letter "Theta."

We have the following theorem about the "has the exact same order as" relation.

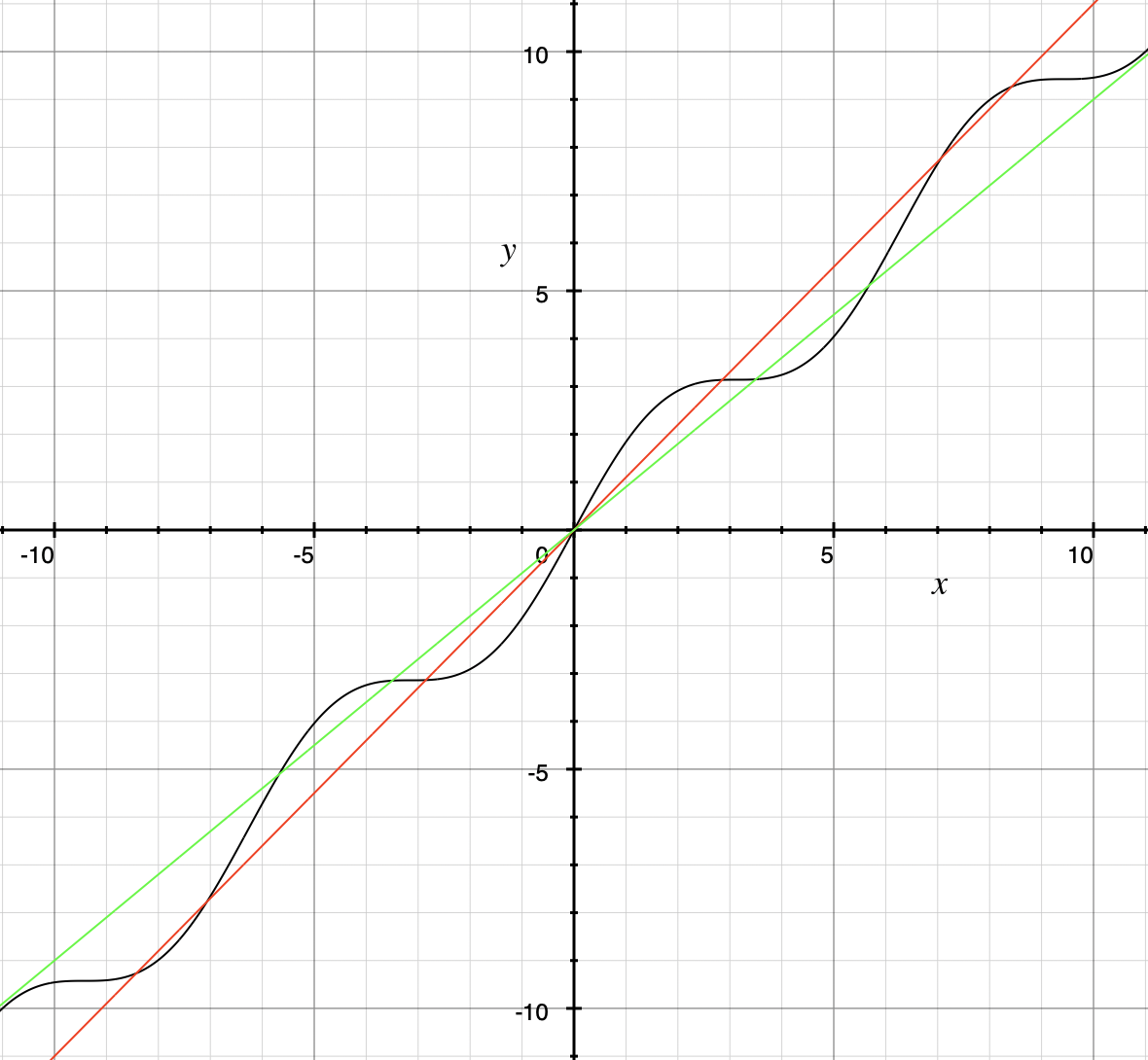

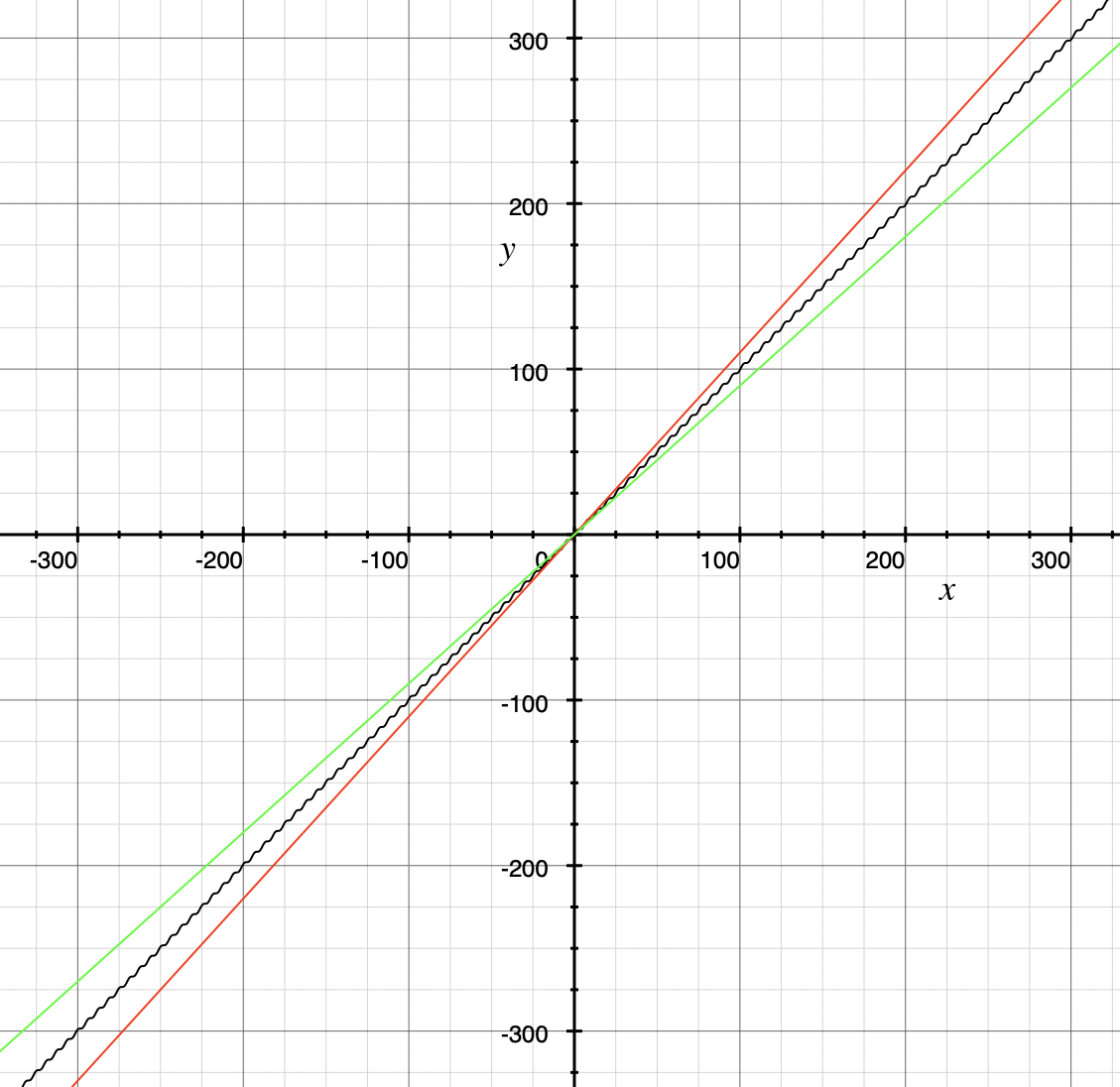

These three properties let you conclude that the "has the exact same order as" relation is an equivalence relation, so the relation partitions the set \(S = \{ f \, | \, f \text{ is a function with domain and codomain } \mathbb{R} \}\) into disjoint sets. For each function \(g \in S\) we can define \(\Theta(g)\) to be the equivalence class \[ \Theta(g) = \{ f \, | \, f \text{ has the exact same order as } g \} \] Every function with domain and codomain \(\mathbb{R}\) is an element of at least one of the \(\Theta(g)\) and for any two functions \(g\) and \(h,\) the sets \(\Theta(g)\) and \(\Theta(h)\) must either be equal or have empty intersection. For example, the earlier example shows that \(\Theta(x + \sin x)\) and \(\Theta(x)\) are the same set, so we can say that the function \(f(x) = x + \sin x\) is of linear order.
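As a rough numerical illustration of the earlier example, the Python sketch below spot-checks that \(f(x) = x + \sin x\) and \(g(x) = x\) satisfy two-sided bounds of the form \(A\,|g(x)| \le |f(x)| \le B\,|g(x)|\) for \(x > x_0\), using the witness names that appear in Section 12.5. The witnesses \(A = \frac{1}{2}\), \(B = 2\), \(x_0 = 2\) are our own choice, justified by \(x - 1 \le x + \sin x \le x + 1\), and the helper name `satisfies_theta_bounds` is hypothetical. Checking finitely many sample points can only illustrate the inequality; it does not prove it.

```python
import math

def f(x: float) -> float:
    """f(x) = x + sin(x), the function from the earlier example."""
    return x + math.sin(x)

def g(x: float) -> float:
    """g(x) = x, a representative of the linear order class."""
    return x

# Witnesses chosen for this sketch: for x > 2,
# x + sin(x) >= x - 1 >= x/2  and  x + sin(x) <= x + 1 <= 2x.
A, B, x0 = 0.5, 2.0, 2.0

def satisfies_theta_bounds(f, g, A, B, x0, samples):
    """Check A*|g(x)| <= |f(x)| <= B*|g(x)| at every sample point x > x0."""
    return all(A * abs(g(x)) <= abs(f(x)) <= B * abs(g(x))
               for x in samples if x > x0)

sample_points = [x0 + 0.01 * k for k in range(1, 100_001)]  # x in (2, 1002]
print(satisfies_theta_bounds(f, g, A, B, x0, sample_points))
```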

Mathematicians and computer scientists are very different beasts… well, they are all human, but they have developed different cultures, so they often use the same symbols in different ways.

A mathematician, like the author of the Remix, would write the very formal \(f \in \Theta(g)\) and say "\(f\) is an element of Theta \(g\)" to mean that "\(f\) has the exact same order as \(g\)." In the earlier example, a mathematician could abbreviate this a little bit and write "\(x + \sin(x)\) is in \(\Theta(x).\)" Computer scientists have traditionally written this relation as \(f(x) = \Theta(g(x))\) and say "\(f(x)\) is big Theta of \(g(x)\)." In the earlier example, a computer scientist could write "\(x + \sin(x) = \Theta(x)\)." As a mathematician, I need to point out that the function \(f\) is not equal, in the mathematical sense, to the equivalence class containing \(g\), because it’s just one of the infinitely many functions in that equivalence class. I believe that both mathematicians and computer scientists agree that \(\Theta(g(x)) = f(x)\) is just too hideous a notation to use… so please do not ever, ever use it!

12.3. Big O notation

Traditionally, computer scientists are much more interested in the idea that "\(f\) grows at most at the rate of \(g\)." This corresponds to the second part of the inequality used to define big Theta in the previous section.

Note that Big O only gives an upper bound on the growth rate of functions. That is, the function \(f(x) = x + \sin(x)\) with domain and range \(\mathbb{R},\) used in an earlier example, is \(O(x)\) but also is \(O(x^{2})\) and is \(O(2^{x}).\)
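The following Python sketch illustrates that big O is only an upper bound: it spot-checks \(|f(x)| \le A\,|g(x)|\) for \(f(x) = x + \sin x\) at sample points \(x > n\), with \(g(x)\) equal to \(x\), \(x^2\), and \(2^x\). The witness pairs \((A, n)\) are our own choices, each justified by \(x + \sin x \le x + 1\); the witness names \(A\) and \(n\) follow the exercises at the end of this chapter, and the helper `is_upper_bounded` is hypothetical. A passing check on samples is evidence, not a proof.

```python
import math

def f(x: float) -> float:
    """f(x) = x + sin(x)."""
    return x + math.sin(x)

def is_upper_bounded(f, g, A, n, samples):
    """Check |f(x)| <= A*|g(x)| at every sample point x > n."""
    return all(abs(f(x)) <= A * abs(g(x)) for x in samples if x > n)

samples = [2 + 0.01 * k for k in range(1, 5_001)]  # x in (2, 52]

# Witnesses (A, n) chosen for this sketch; each relies on x + sin(x) <= x + 1.
checks = {
    "O(x)": is_upper_bounded(f, lambda x: x, 2, 2, samples),
    "O(x^2)": is_upper_bounded(f, lambda x: x**2, 1, 2, samples),
    "O(2^x)": is_upper_bounded(f, lambda x: 2.0**x, 1, 2, samples),
}
print(checks)  # {'O(x)': True, 'O(x^2)': True, 'O(2^x)': True}
```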

Big O is typically used to analyze the worst-case complexity of an algorithm. For example, if \(n\) is the size of the input, then a big O estimate of the worst-case running time describes how the largest possible number of steps grows as \(n\) becomes arbitrarily large. Mathematically, we want to consider time complexity in this asymptotic sense, when \(n\) is arbitrarily large, so we may ignore constant factors. Why we can ignore constants will make more sense after we discuss how limits, borrowed from continuous mathematics (that is, calculus), can be used to compare the rates of growth of two different functions.
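To make "worst case" concrete, here is a minimal Python sketch that counts comparisons in a left-to-right linear search, an algorithm chosen here only for illustration (the function name `linear_search_comparisons` is hypothetical). When the target is absent, every one of the \(n\) elements is compared, so the worst-case count is exactly \(n\), which is \(O(n)\). A lucky input can finish after a single comparison, but a worst-case estimate does not rely on such luck.

```python
def linear_search_comparisons(items, target):
    """Scan items left to right; return (index or None, comparisons made)."""
    comparisons = 0
    for i, item in enumerate(items):
        comparisons += 1
        if item == target:
            return i, comparisons
    return None, comparisons

for n in [10, 100, 1000, 10000]:
    data = list(range(n))
    # Worst case: the target is absent, so all n elements are compared.
    _, worst = linear_search_comparisons(data, -1)
    # A favorable case: the target is the very first element.
    _, best = linear_search_comparisons(data, 0)
    print(n, worst, best)
```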

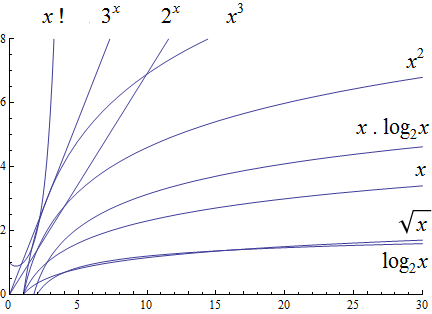

12.3.1. Common Complexities To Consider

The most commonly encountered complexities, written as functions of the input size \(n\) and ordered from slowest-growing to fastest-growing, are as follows.

- Constant complexity: \(O(1)\)
- Logarithmic complexity: \(O(\log (n))\)
- Radical complexity: \(O(\sqrt{n})\)
- Linear complexity: \(O(n)\)
- Linearithmic complexity: \(O(n\log (n))\)
- Quadratic complexity: \(O(n^2)\)
- Cubic complexity: \(O(n^3)\)
- Exponential complexity: \(O(b^n)\), where \(b > 1\)
- Factorial complexity: \(O(n!)\)
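The ordering above is asymptotic: it describes what happens for sufficiently large \(n\), not at every input size. The Python sketch below evaluates each function at a few values of \(n\), taking base-2 logarithms and \(b = 2\) for the exponential (both are assumptions made for this illustration), and reports whether the values increase strictly down the list. At \(n = 10\) the ordering fails, because \(\log_2 10 \approx 3.32\) exceeds \(\sqrt{10} \approx 3.16\); at \(n = 20\) and \(n = 40\) it holds.

```python
import math

# (label, function of n) in the order listed above. Base-2 logarithms and
# the exponential base b = 2 are assumptions made for this illustration.
complexities = [
    ("1", lambda n: 1),
    ("log n", lambda n: math.log2(n)),
    ("sqrt n", lambda n: math.sqrt(n)),
    ("n", lambda n: n),
    ("n log n", lambda n: n * math.log2(n)),
    ("n^2", lambda n: n**2),
    ("n^3", lambda n: n**3),
    ("2^n", lambda n: 2**n),
    ("n!", lambda n: math.factorial(n)),
]

def values_at(n):
    """Evaluate every function in the list at input size n."""
    return [func(n) for _, func in complexities]

def in_listed_order(n):
    """True if the values at n increase strictly from top to bottom."""
    vals = values_at(n)
    return all(a < b for a, b in zip(vals, vals[1:]))

for n in [10, 20, 40]:
    print(n, in_listed_order(n))  # 10 False, 20 True, 40 True
```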

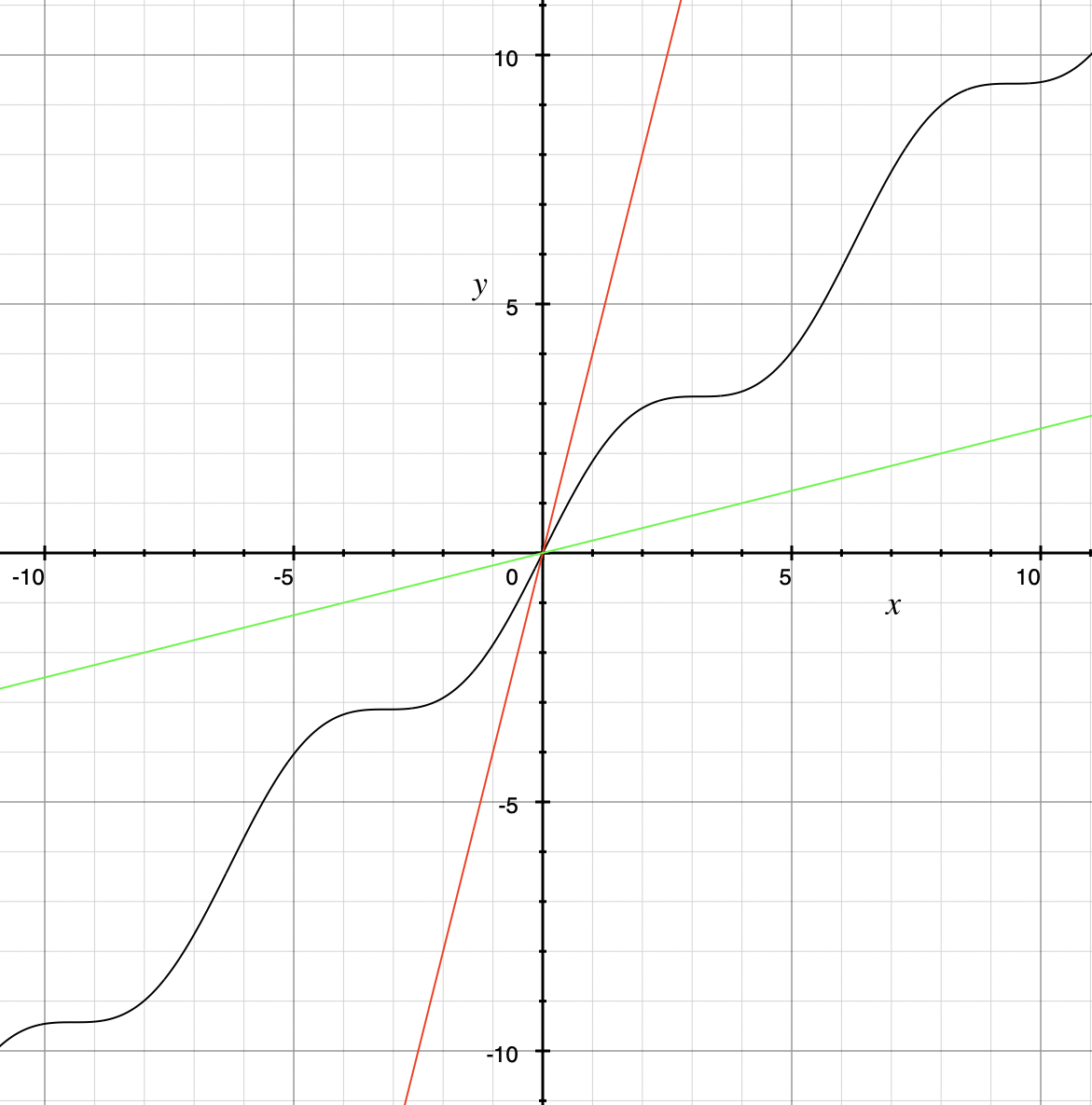

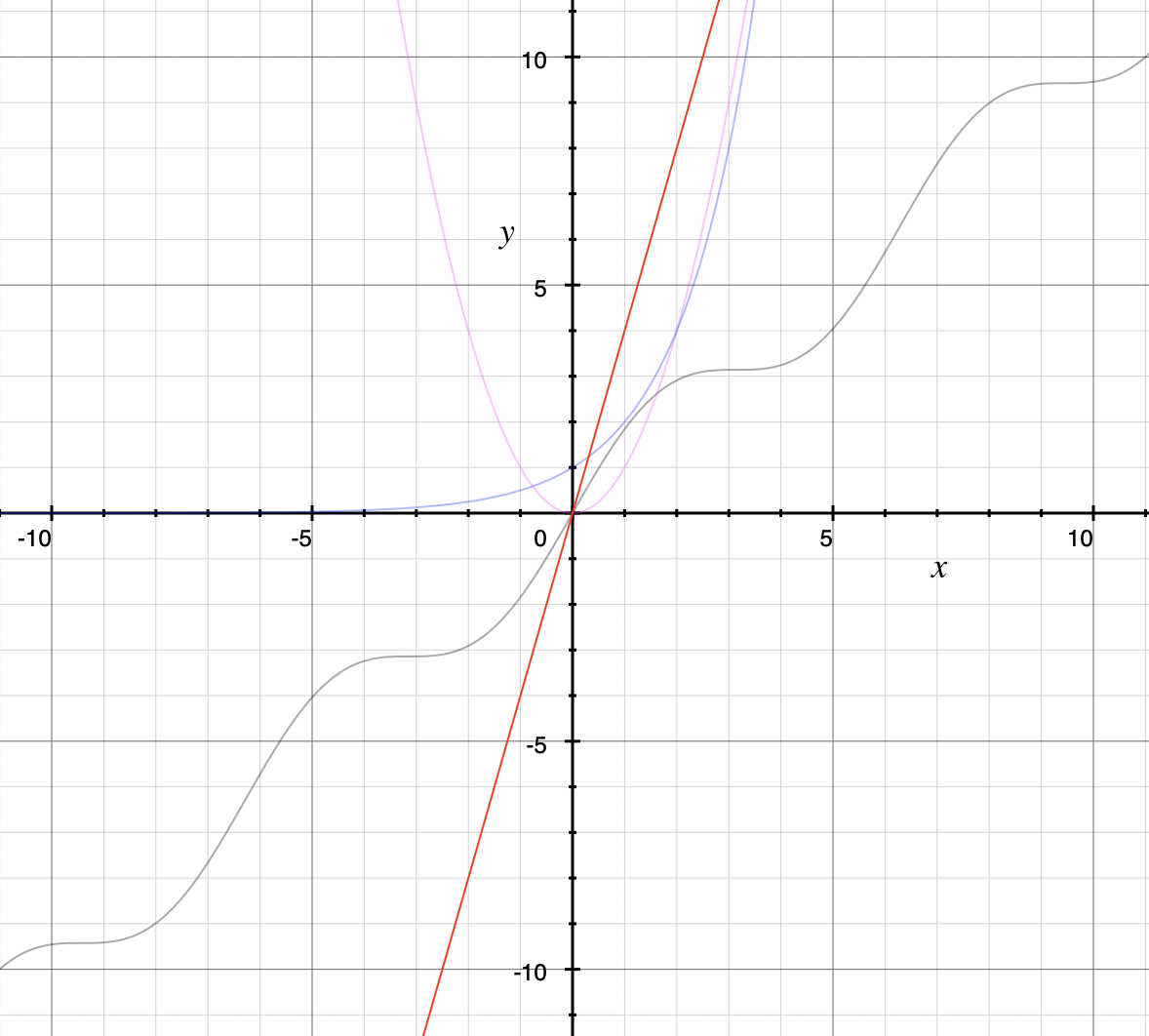

To compare these complexities, we will look at the graphs of the corresponding functions. It is easier to treat these functions as defined for every real-number input rather than just for natural numbers. This will also allow us to use continuous mathematics (that is, calculus) to analyze and compare the growth of different functions.

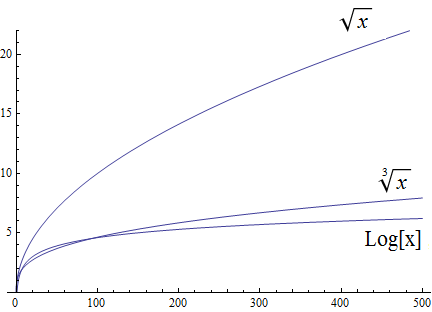

Radical growth is larger than logarithmic growth:

In the preceding graph, we’ve used \(\text{Log}[x]\) to label the graph of a logarithmic function without stating the base for the logarithm. Is this the function \(y = \log_{2}(x)\), \(y = \log_{10}(x)\), \(y = \ln(x) = \log_{e}(x)\), or a logarithm to some other base? For the purposes of studying the growth of functions, it does not matter which of these logarithms we use. You may recall that one of the properties of logarithms states that for any two positive constant bases \(a\) and \(b\), both different from \(1\), we have \(\log_{a}(x) = \log_{a}(b) \cdot \log_{b}(x)\), where \(\log_{a}(b)\) is also a constant. As stated earlier, we may ignore constant factors when considering the growth of functions.
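A quick numerical check of the change-of-base property, written as a Python sketch: with \(a = 2\) and \(b = 10\) (bases chosen for illustration), \(\log_2(x)\) should equal the constant \(\log_2(10) \approx 3.3219\) times \(\log_{10}(x)\) at every sampled \(x\). It uses only `math.log2` and `math.log10` from Python's standard library.

```python
import math

# With a = 2 and b = 10, the constant log_a(b) is log_2(10), about 3.3219.
c = math.log2(10)

for x in [5, 100, 1e6, 1e12]:
    # log_2(x) should match c * log_10(x) up to floating-point rounding.
    print(x, math.log2(x), c * math.log10(x))
```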

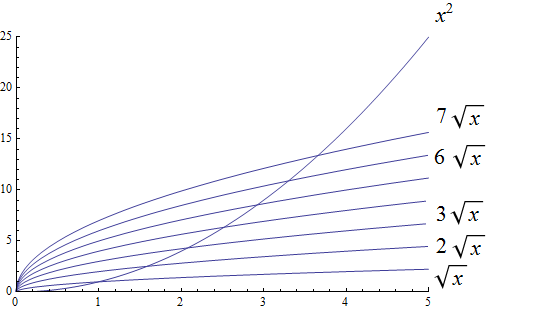

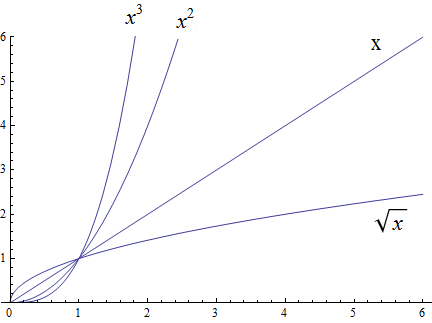

Polynomial growth is larger than radical growth:

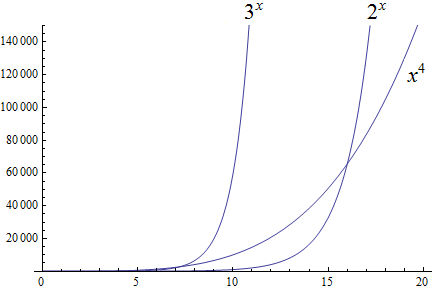

Exponential growth is larger than polynomial growth:

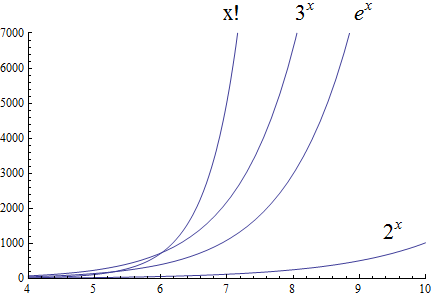

Factorial growth is larger than exponential growth:

In the preceding graph, we’ve used \(x!\) to label the graph of the function \(y = \Gamma(x+1)\), where \(\Gamma\) is the Gamma function. The Gamma function is defined and continuous for all positive real numbers, so \(y = \Gamma(x+1)\) is defined and continuous for all nonnegative real numbers \(x\), and \(n! = \Gamma(n+1)\) for every \(n \in \mathbb{N}\). Further study of the Gamma function is beyond the scope of this textbook.
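The identity \(n! = \Gamma(n+1)\) can be checked numerically with Python's standard library, which provides `math.factorial` and `math.gamma`. The sketch below compares them for small \(n\) and also evaluates \(\Gamma(x+1)\) at the non-integer value \(x = 2.5\), which is what makes a continuous curve labeled \(x!\) possible. Floating-point results are compared with `math.isclose` rather than exact equality.

```python
import math

# n! versus Gamma(n + 1) for the first few natural numbers.
for n in range(8):
    print(n, math.factorial(n), math.gamma(n + 1))

# Gamma(x + 1) is also defined between the integers, e.g. at x = 2.5:
# Gamma(3.5) = 2.5 * 1.5 * 0.5 * sqrt(pi) = 15 * sqrt(pi) / 8.
value = math.gamma(2.5 + 1)
print(value, math.isclose(value, 15 * math.sqrt(math.pi) / 8))
```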

Using the graphical analysis of the growth of typical functions, we obtain the following growth ordering, which is also presented graphically on a graph with a logarithmic scale.

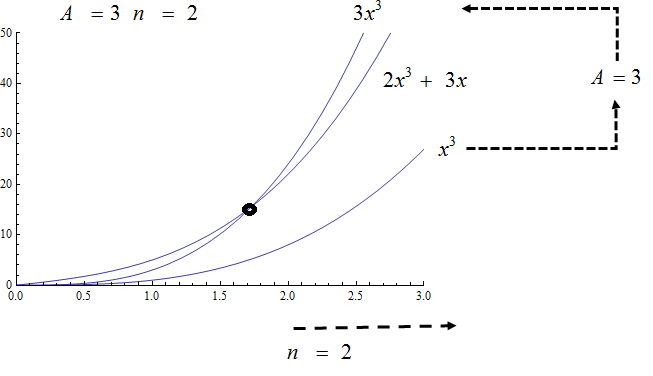

The asymptotic behavior of a function for large \(x\) is determined by its most dominant term. For example, for large \(x\), the function \(f(x)=x^{3} + 2x^{2}-2x\) is dominated by the term \(x^3\): we have \(f(1000) = 1.001998 \times 10^9 \approx 1 \times 10^9 = 1000^3\). That is, \(f(x) \approx x^3\) for large \(x\), or, asymptotically, \(f(x)\) behaves like \(x^3\). We write \(f(x)=O(x^3)\), that is, \(x^3 +2x^2-2x=O(x^3)\).
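A small Python check of the numbers in this example: the sketch evaluates \(f(1000)\) exactly with integer arithmetic and then prints the ratio \(f(x)/x^3 = 1 + \frac{2}{x} - \frac{2}{x^2}\), which should approach \(1\) as \(x\) grows. The sample points are arbitrary choices.

```python
def f(x):
    """f(x) = x^3 + 2x^2 - 2x from the example above."""
    return x**3 + 2 * x**2 - 2 * x

print(f(1000))  # 1001998000, about 1.001998 x 10^9

# f(x) / x^3 = 1 + 2/x - 2/x^2, which approaches 1 as x grows.
for x in [10, 100, 1000, 10**6]:
    print(x, f(x) / x**3)
```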

Likewise, if \(c\) is a nonzero constant, then \(c \cdot f(x)\) and \(f(x)\) have the same asymptotic behavior for large \(x\); that is, \(O(c \cdot f(x))=O(f(x))\).
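Here is a short derivation of \(O(c \cdot f(x)) = O(f(x))\) for a nonzero constant \(c\), assuming the witness form of the definition of big O used in this chapter's exercises: \(h(x)\) is \(O(f(x))\) when there are witnesses \(A\) and \(n\) with \(|h(x)| \le A\,|f(x)|\) for all \(x > n\). Each line shows how the witness \(A\) changes when \(f\) is replaced by \(c \cdot f\), or back.

```latex
\begin{aligned}
|h(x)| \le A\,|c\,f(x)| \ \text{for all } x > n
  &\;\Longrightarrow\; |h(x)| \le \bigl(A\,|c|\bigr)\,|f(x)| \ \text{for all } x > n,\\
|h(x)| \le A\,|f(x)| \ \text{for all } x > n
  &\;\Longrightarrow\; |h(x)| \le \Bigl(\frac{A}{|c|}\Bigr)\,|c\,f(x)| \ \text{for all } x > n.
\end{aligned}
```

So every function that is \(O(c \cdot f(x))\) is \(O(f(x))\), and vice versa, which is exactly what the equality of the two sets means.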

To show that a function \(f(x)\) is not \(O(g(x))\), we must show that no constant \(A\) can scale \(g(x)\) so that \(A g(x) \geq f(x)\) for all sufficiently large \(x\), as in the following example.
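Showing that \(f(x)\) is not \(O(g(x))\) means that, for every proposed pair of witnesses \(A\) and \(n\), there is some \(x > n\) with \(|f(x)| > A\,|g(x)|\). The Python sketch below does this for \(f(x) = x^2\) and \(g(x) = x\), a pair chosen here for illustration; the function name `counterexample` is hypothetical. For \(x > 0\), the inequality \(x^2 > A x\) holds exactly when \(x > A\), so any \(x\) larger than both \(A\) and \(n\) defeats the proposed witnesses.

```python
def counterexample(A: float, n: float) -> float:
    """For f(x) = x^2 and g(x) = x, return an x > n with |f(x)| > A*|g(x)|.

    Any x > max(A, n, 0) works: for x > 0, x^2 > A*x exactly when x > A.
    """
    return max(A, n, 0) + 1

# Whatever witnesses (A, n) are proposed, the returned x breaks them.
for A, n in [(1, 1), (10, 0), (1000, 5), (2.5, 10**6)]:
    x = counterexample(A, n)
    print(A, n, x, x > n and x**2 > A * abs(x))
```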

12.4. Properties of Big O notation.

Suppose \(f(x)\) is \(O(F(x))\) and \(g(x)\) is \(O(G(x))\).

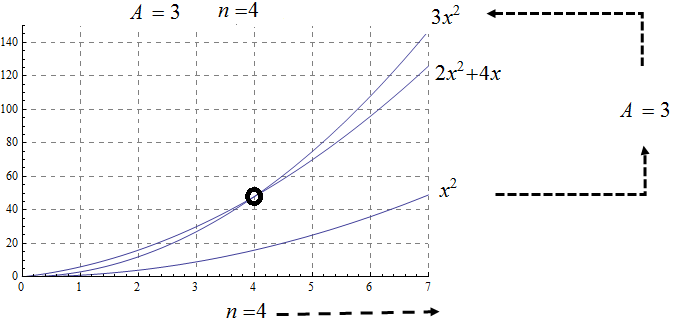

We can use these properties to show, for instance, that \(2x^2\) is \(O\left(x^2\right)\). Likewise, if \(f(x) =2x^2\) and \(g(x) =4x\), then \(2x^2\) is \(O(x^2)\) and \(4x\) is \(O(x)\), so the property for sums, which uses the maximum, gives that \(2x^2+4x\) is \(O(\max(x^2, x)) =O(x^2)\).

It is true in general that if a polynomial \(f(x)\) has degree \(n\) then \(f(x)\) is \(O(x^n)\).
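One standard way to see why a polynomial of degree \(n\) is \(O(x^n)\), sketched here with the threshold written as \(x > 1\) because the letter \(n\) is already taken by the degree: for \(x > 1\), every power \(x^k\) with \(0 \le k \le n\) satisfies \(x^k \le x^n\), so the triangle inequality gives

```latex
\begin{aligned}
\left|a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0\right|
  &\le |a_n|\,x^n + |a_{n-1}|\,x^{n-1} + \cdots + |a_1|\,x + |a_0| \\
  &\le \bigl(|a_n| + |a_{n-1}| + \cdots + |a_1| + |a_0|\bigr)\,x^n
  \qquad \text{for all } x > 1.
\end{aligned}
```

Thus \(A = |a_n| + \cdots + |a_0|\) works as a witness, with threshold \(1\). For instance, for \(2x^2 + 4x\) this gives \(A = 6\), since \(2x^2 + 4x \le 6x^2\) whenever \(x > 1\).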

For example, \(f(x)= x^3+1\) is \(O(x^3)\) and \(g(x)=x^2-x\) is \(O(x^2)\), so \(f(x) \cdot g(x)\) is \(O(x^3 \cdot x^2) =O(x^5)\). We can verify this explicitly by multiplying: \(f(x) \cdot g(x)= (x^3+1) \cdot (x^2-x)= x^5 -x^4+x^2-x\), which clearly is \(O(x^5)\).
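The explicit multiplication above can be reproduced with a short Python sketch that stores a polynomial as a list of coefficients, lowest degree first (a representation chosen for this illustration; the helper names `poly_multiply` and `degree` are hypothetical). The degree of the product is the sum of the degrees of the factors, which matches the \(O(x^3 \cdot x^2) = O(x^5)\) estimate.

```python
def poly_multiply(p, q):
    """Multiply polynomials given as coefficient lists, lowest degree first."""
    result = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            result[i + j] += a * b  # a*x^i times b*x^j contributes to x^(i+j)
    return result

def degree(p):
    """Index of the highest nonzero coefficient."""
    return max(i for i, coeff in enumerate(p) if coeff != 0)

f = [1, 0, 0, 1]  # 1 + x^3
g = [0, -1, 1]    # -x + x^2
product = poly_multiply(f, g)
print(product)                                # [0, -1, 1, 0, -1, 1]
print(degree(f), degree(g), degree(product))  # 3 2 5
```

The printed list reads \(-x + x^2 - x^4 + x^5\), the same polynomial as above.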

As a final example, we consider ordering three functions by growth using the basic properties of Big O and the basic orderings.

12.5. Using Limits to Compare the Growth of Two Functions (CALCULUS I REQUIRED!)

In general, the Remix avoids using calculus methods because calculus is part of continuous mathematics, not discrete mathematics. However, it can be helpful to use calculus to compare the growth of two functions \(f(x)\) and \(g(x)\) that are defined for real numbers \(x\), are differentiable on the interval \((0,\, \infty)\), and satisfy \(\lim_{x \to \infty} f(x) = \lim_{x \to \infty} g(x) = \infty\). To avoid needing to use the absolute value, we can assume that \(0 < f(x)\) and \(0 < g(x)\) for all \(x \geq 0\). (This assumption is safe to make since both functions go to infinity as \(x\) increases without bound, which means that both functions are positive for all \(x\) values greater than or equal to some number \(x_{0}\)… we are just assuming that \(x_{0}=0\), which is the equivalent of shifting the plots of \(f\) and \(g\) to the left by \(x_0\) units.)

If \(f(x)\) and \(g(x)\) are such functions and \(\lim_{x \to \infty} \frac{f(x)}{g(x)} = L\), where \(0 \leq L < \infty\), then \(f(x)\) is \(O(g(x)),\) and if \(0 < L < \infty\) then \(f(x)\) is \(\Theta(g(x)).\)

To see this, recall that \(\lim_{x \to \infty} \frac{f(x)}{g(x)} = L\) means that we can make the value of \(\frac{f(x)}{g(x)}\) be as close to \(L\) as we want by choosing \(x\) values that are sufficiently large. In particular, there is a real number \(x_{0}\) such that \(\frac{f(x)}{g(x)} < L + 1\) for all \(x > x_{0}\). Because we assumed that \(0 < g(x)\), we can multiply both sides by \(g(x)\) to get \(f(x) < (L+1) \cdot g(x)\) for all \(x > x_{0}\). So we can choose \(B = L + 1\) and \(x_{0}\) as our witnesses, which shows that \(f(x)\) is \(O(g(x))\). (This choice works even when \(L = 0\).) Furthermore, if \(L > 0\), we can choose \(x_{0}\) large enough that \(L-\frac{L}{2} < \frac{f(x)}{g(x)} < L+\frac{L}{2}\) for all \(x > x_{0}\). Multiplying through by \(g(x)\) gives \((L-\frac{L}{2}) \cdot g(x) < f(x) < (L+\frac{L}{2}) \cdot g(x)\), so with the witnesses \(A = \frac{L}{2}\), \(B = \frac{3L}{2}\), and \(x_{0}\) we have \(A \cdot g(x) < f(x) < B \cdot g(x)\) whenever \(x > x_{0}\), and therefore \(f(x)\) is \(\Theta(g(x))\).
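The limit method can be previewed numerically with the Python sketch below, which prints \(f(x)/g(x)\) at \(x = 10, 100, \ldots, 10^6\) for two example pairs chosen for illustration: \(3x^2 + 5x\) against \(x^2\) (ratio \(3 + 5/x\), heading to \(L = 3\)) and \(x \ln x\) against \(x^2\) (ratio \(\ln x / x\), heading to \(L = 0\)). Watching a ratio settle down only suggests what the limit is; it does not prove it. For the first pair, the upper witness \(B = L + 1 = 4\) from the argument above works with \(x_0 = 5\), since \(3 + 5/x < 4\) exactly when \(x > 5\); this agrees with the witnesses \(A = 4\), \(n = 5\) given in one of the exercises at the end of this chapter. The helper name `ratios` is hypothetical.

```python
import math

def ratios(f, g, xs):
    """Return f(x)/g(x) at each sample point x."""
    return [f(x) / g(x) for x in xs]

xs = [10.0**k for k in range(1, 7)]  # 10, 100, ..., 10^6

# f(x) = 3x^2 + 5x and g(x) = x^2: the ratio is 3 + 5/x, heading to L = 3 > 0.
theta_case = ratios(lambda x: 3 * x**2 + 5 * x, lambda x: x**2, xs)

# f(x) = x ln(x) and g(x) = x^2: the ratio is ln(x)/x, heading to L = 0.
big_o_case = ratios(lambda x: x * math.log(x), lambda x: x**2, xs)

print(theta_case)
print(big_o_case)

# With L = 3, the upper witness is B = L + 1 = 4, and 3 + 5/x < 4 for x > 5.
B, x0 = 3 + 1, 5
print(all(r < B for x, r in zip(xs, theta_case) if x > x0))
```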

Note that this method does not determine actual numerical values for the witnesses; it just guarantees that witnesses exist, which is all that is needed to show that \(f(x)\) is \(O(g(x))\).
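As a worked example of the limit method (our own example, not one taken from the text above), take \(f(x) = x^2 + 1\) and \(g(x) = 2^x\). Both functions are positive and differentiable and both go to infinity, so the hypotheses hold. The quotient is an \(\infty/\infty\) form, so L'Hôpital's rule from Calculus I can be applied twice:

```latex
\lim_{x \to \infty} \frac{x^2 + 1}{2^x}
  = \lim_{x \to \infty} \frac{2x}{2^x \ln 2}
  = \lim_{x \to \infty} \frac{2}{2^x (\ln 2)^2}
  = 0.
```

Since \(L = 0\), the result shows that \(x^2 + 1\) is \(O(2^x)\); the method gives no lower bound here, and in fact \(x^2 + 1\) is not \(\Theta(2^x)\).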

12.6. Exercises

- Give Big O estimates for
  - \(f\left(x\right)=4\)
  - \(f\left(x\right)=3x-2\)
  - \(f\left(x\right)=5x^6-4x^3+1\)
  - \(f\left(x\right)=2\sqrt x+5\)
  - \(f\left(x\right)=x^5+4^x\)
  - \(f\left(x\right)=x\log{x}+3x^2\)
  - \(f\left(x\right)=5x^2e^x+4x!\)
  - \(f\left(x\right)=\displaystyle \frac{x^6}{x^2+1}\) (Hint: Use long division.)

- Give Big O estimates for
  - \(f\left(x\right)=2^5\)
  - \(f\left(x\right)=5x-2\)
  - \(f\left(x\right)=5x^8-4x^6+x^3\)
  - \(f\left(x\right)=4\sqrt[3]{x}+3\)
  - \(f\left(x\right)=3^x+4^x\)
  - \(f\left(x\right)=x^2\log{x}+5x^3\)
  - \(f\left(x\right)=5x^6\cdot 10^x+4x!\)
  - \(f\left(x\right)=\displaystyle \frac{x^5+2x^4-x+2}{x+2}\) (Hint: Use long division.)

- Show, using the definition, that \(f\left(x\right)=3x^2+5x\) is \(O(x^2)\) with \(A=4\) and \(n=5\). Support your answer graphically.

- Show, using the definition, that \(f\left(x\right)=x^2+6x+2\) is \(O(x^2)\) with \(A=3\) and \(n=6\). Support your answer graphically.

- Show, using the definition, that \(f\left(x\right)=2x^3+6x^2+3\) is \(O(x^3)\). State the witnesses \(A\) and \(n\), and support your answer graphically.

- Show, using the definition, that \(f\left(x\right)=3x^3+10x^2+1000\) is \(O(x^3)\). State the witnesses \(A\) and \(n\), and support your answer graphically.

- Show that \(f\left(x\right)=\sqrt x\) is \(O\left(x^3\right)\), but \(g\left(x\right)=x^3\) is not \(O(\sqrt x)\).

- Show that \(f\left(x\right)= x^2\) is \(O\left(x^3\right)\), but \(g\left(x\right)=x^3\) is not \(O( x^2)\).

- Show that \(f\left(x\right)=\sqrt x\) is \(O\left(x\right)\), but \(g\left(x\right)=x\) is not \(O(\sqrt x)\).

- Show that \(f\left(x\right)=\sqrt[3]{x}\) is \(O\left(x^2\right)\), but \(g\left(x\right)=x^2\) is not \(O(\sqrt[3]{x})\).

- Show that \(f\left(x\right)=\sqrt[3]{x}\) is \(O\left(x\right)\), but \(g\left(x\right)=x\) is not \(O(\sqrt[3]{x})\).

- Order the following functions by growth: \(x^{7/3},\ e^x,\ 2^x,\ x^5,\ 5x+3,\ 10x^2+5x+2,\ x^3,\ \log{x},\ x^3\log{x}\)

- Order the following functions by growth from slowest to fastest: \(3x!,\ 10^x,\ x\cdot\log{x},\ \log{x}\cdot\log{x},\ 2x^2+5x+1,\ \pi^x,\ x^{3/2},\ 4^5,\ \sqrt{x}\cdot\log{x}\)

- Consider the functions \(f\left(x\right)=2^x+2x^3+e^x\log{x}\) and \(g\left(x\right)=\sqrt x+x\log{x}\). Find the best big \(O\) estimates of
  - \((f+g)(x)\)
  - \((f\cdot g)(x)\)

- Consider the functions \(f\left(x\right)=2x+3x^3+5\log{x}\) and \(g\left(x\right)=\sqrt x+x^2\log{x}\). Find the best big \(O\) estimates of
  - \((f+g)(x)\)
  - \((f\cdot g)(x)\)

- State the definition of "\(f(x)\) is \(O(g(x))\)" using logical quantifiers and witnesses \(A\) and \(n\).

- Negate the definition of "\(f(x)\) is \(O(g(x))\)" using logical quantifiers, and then state in words what it means that \(f(x)\) is not \(O(g(x))\).